LocalAI Embeddings

Overview

LocalAI Embeddings is a powerful feature in AnswerAI that lets you use local embedding models, such as those compatible with the ggml format. LocalAI lets you run Large Language Models (LLMs) and embedding models locally or on-premises on consumer-grade hardware. This gives you a more private and customizable alternative to cloud-based solutions.

Key Benefits

- Privacy and Security: Run embeddings locally, keeping your data on your own hardware.

- Customization: Use a wide variety of models compatible with the ggml format.

- Cost-effective: Eliminate the need for expensive cloud API calls by using your own computing resources.

How to Use

Step 1: Set up LocalAI

- Clone the LocalAI repository:

```bash
git clone https://github.com/go-skynet/LocalAI
```

- Navigate to the LocalAI directory:

```bash
cd LocalAI
```

- Download a model using LocalAI's API endpoint. For this example, we'll use the BERT Embeddings model:

- Verify that the model has been downloaded to the `/models` folder:

- Test the embeddings by running:

```bash
curl http://localhost:8080/v1/embeddings -H "Content-Type: application/json" -d '{
  "input": "Test",
  "model": "text-embedding-ada-002"
}'
```

You should receive a JSON response that contains an embedding vector for the input text.
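You can also check the response from a script instead of reading it by eye. The helper below counts the values in the returned `embedding` array.

This is a minimal sketch with several assumptions:
- LocalAI returns the OpenAI-compatible layout, with the vector in `data[0].embedding`.
- The request contains a single input.
- `embedding_dims` is a hypothetical name.

The text matching is not a substitute for a real JSON parser.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the length of the "embedding" array in a
# response from /v1/embeddings. Assumes the OpenAI-compatible layout and a
# single input, so the response holds exactly one "embedding" array.
embedding_dims() {
  # Strip whitespace so the pattern does not depend on pretty-printing,
  # capture the text between "embedding":[ and the next ], then count the
  # comma-separated values. Prints 0 if no embedding array is found.
  printf '%s' "$1" | tr -d ' \t\r\n' \
    | sed -n 's/.*"embedding":\[\([^]]*\)].*/\1/p' \
    | tr ',' '\n' \
    | grep -c .
}
```

For example, pass the output of the curl test as the only argument:

`embedding_dims "$(curl -s http://localhost:8080/v1/embeddings -H "Content-Type: application/json" -d '{"input": "Test", "model": "text-embedding-ada-002"}')"`

A result of `0` usually means the server returned an error object instead of an embedding.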

Step 2: Configure AnswerAI

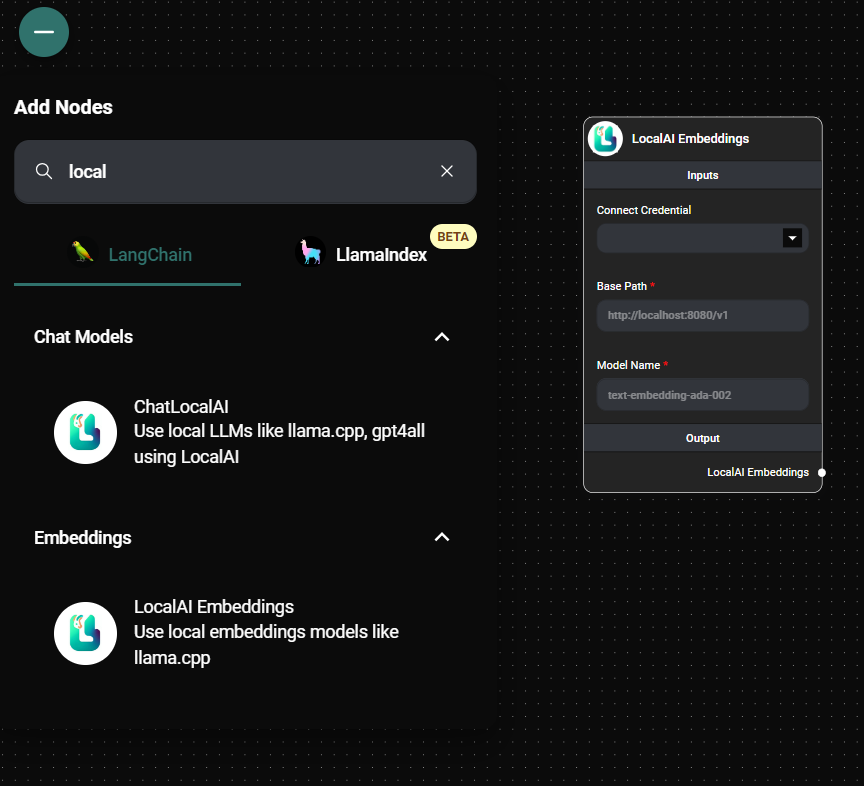

- In the AnswerAI canvas, drag and drop a new LocalAIEmbeddings component:

LocalAIEmbeddings Node & Drop UI

- Fill in the required fields:
  - Base Path: Enter the base URL for LocalAI (e.g., `http://localhost:8080/v1`).
  - Model Name: Specify the model you want to use (e.g., `text-embedding-ada-002`).

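The two fields map onto the URL and the `model` value in the curl test from Step 1. This sketch assumes that the node, like other OpenAI-compatible clients, appends `/embeddings` to the Base Path. That is why the example Base Path ends in `/v1`.

`embeddings_body` and `embed` are hypothetical helpers for sending the same request from a shell with the values you plan to enter in AnswerAI.

```bash
#!/usr/bin/env bash
# Sketch: the Step 1 embeddings request, parameterized by the same two values
# the LocalAIEmbeddings node asks for (Base Path and Model Name).

# Build the JSON request body. Backslashes and double quotes in the input are
# escaped; other control characters (e.g. newlines) are not handled.
embeddings_body() {
  local input model
  input=$(printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g')
  model=$2
  printf '{"input":"%s","model":"%s"}' "$input" "$model"
}

# POST the body to <Base Path>/embeddings. A trailing slash on the Base Path
# is removed first so the URL does not contain "//".
embed() {
  local base=${1%/} input=$2 model=$3
  curl -sS "$base/embeddings" \
    -H "Content-Type: application/json" \
    -d "$(embeddings_body "$input" "$model")"
}
```

For example, `embed http://localhost:8080/v1 "Test" text-embedding-ada-002` sends the same request as the curl test in Step 1.

If that call succeeds, the same Base Path and Model Name should work in the node. Building the body in its own function keeps the JSON quoting out of the curl command line.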

Tips and Best Practices

- Ensure that the model you specify in AnswerAI matches the one you've downloaded to the `/models` folder in LocalAI.
- Keep your local models up to date to benefit from the latest improvements in embedding technology.

- Experiment with different models to find the best balance between performance and resource usage for your specific use case.
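To confirm the model name before entering it in AnswerAI, you can ask LocalAI which models it serves. This sketch rests on a few assumptions:
- LocalAI answers `GET <Base Path>/models` with the OpenAI-compatible list format, in which each entry has an `"id"` field.
- Model names contain no spaces, because `model_listed` (a hypothetical helper) strips whitespace before matching the id as plain text.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the JSON returned by GET <Base Path>/models
# lists a model whose "id" is exactly $2. Assumes the OpenAI-compatible list
# format and model names without spaces (all whitespace is stripped first).
model_listed() {
  local json=$1 name=$2
  # Matching the closing quote too keeps "bert" from matching "bert-embeddings".
  printf '%s' "$json" | tr -d ' \t\r\n' | grep -qF "\"id\":\"$name\""
}
```

For example:

`model_listed "$(curl -sS http://localhost:8080/v1/models)" text-embedding-ada-002 && echo "model found"`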

Troubleshooting

- Model not found error: Make sure the model name specified in AnswerAI exactly matches the filename in the `/models` folder of LocalAI.
- Connection issues: Verify that LocalAI is running and accessible at the specified base path.

- Slow performance: Run LocalAI on a more powerful machine, try a smaller embedding model, or tune your LocalAI configuration.
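To tell connection problems apart, probe the Base Path and read curl's exit status:
- 7 means curl could not connect.
- 28 means the request timed out.
- 22 means the server answered with an HTTP error, which curl reports as a failure because of `-f`.

The sketch assumes LocalAI serves `GET <Base Path>/models`. `diagnose_localai` is a hypothetical helper, not part of AnswerAI or LocalAI.

```bash
#!/usr/bin/env bash
# Hypothetical helper: probe <Base Path>/models and translate curl's exit
# status into a hint for the "Connection issues" case. Requires curl.
diagnose_localai() {
  local base=${1%/} status=0
  # -f turns HTTP errors (4xx/5xx) into exit status 22; --max-time caps the wait.
  curl -sS -f --max-time 5 -o /dev/null "$base/models" || status=$?
  case $status in
    0)  echo "ok: LocalAI answered at $base" ;;
    7)  echo "cannot connect: is LocalAI running and reachable at $base?" ;;
    28) echo "timed out: LocalAI at $base did not answer within 5 seconds" ;;
    22) echo "HTTP error from $base/models: check the Base Path" ;;
    *)  echo "curl failed with exit status $status" ;;
  esac
  return "$status"
}
```

For example, `diagnose_localai http://localhost:8080/v1` prints a one-line hint and returns curl's exit status. This lets you use it in a script's `if` condition.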

For more detailed information on LocalAI and its capabilities, refer to the LocalAI documentation.