ChatLocalAI Integration

Overview

ChatLocalAI is an AnswerAI component that connects to LocalAI, letting you run language models locally or on-premise on consumer-grade hardware. LocalAI provides a drop-in replacement REST API compatible with the OpenAI API specification and supports multiple model families in the ggml format.

Key Benefits

- Run language models locally without relying on external cloud services

- Maintain data privacy and security by keeping everything on-premise

- Reduce costs associated with cloud-based AI services

- Choose from various model families compatible with the ggml format

How to Use

Setting up LocalAI

- Clone the LocalAI repository:

      git clone https://github.com/go-skynet/LocalAI

- Navigate to the LocalAI directory:

      cd LocalAI

- Copy your desired model to the models/ directory. For example, to download the gpt4all-j model:

      wget https://gpt4all.io/models/ggml-gpt4all-j.bin -O models/ggml-gpt4all-j

- Start the LocalAI service using Docker:

      docker compose up -d --pull always

- Verify the API is accessible:

      curl http://localhost:8080/v1/models
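The verification step can also be scripted. The following is a minimal Python sketch, assuming the endpoint answers with the OpenAI-style list shape (a "data" array holding one entry with an "id" per model). The helper names `extract_model_ids` and `list_models` are illustrative and are not part of LocalAI or AnswerAI.

```python
import json
from urllib.request import urlopen


def extract_model_ids(payload: dict) -> list[str]:
    """Return the model IDs from an OpenAI-style /v1/models response body."""
    # Assumption: the body looks like {"object": "list", "data": [{"id": ...}, ...]}.
    # A missing or null "data" field is treated as "no models".
    entries = payload.get("data") or []
    return [entry["id"] for entry in entries if "id" in entry]


def list_models(base_path: str = "http://localhost:8080/v1", timeout: float = 5.0) -> list[str]:
    """Query <base_path>/models and return the IDs the server reports."""
    url = f"{base_path.rstrip('/')}/models"
    with urlopen(url, timeout=timeout) as response:
        return extract_model_ids(json.load(response))
```

Calling `list_models()` against a running LocalAI instance should return the names of the files placed in the models/ directory; an exception from `urlopen` suggests the service is not reachable at that address.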

Integrating with AnswerAI

-

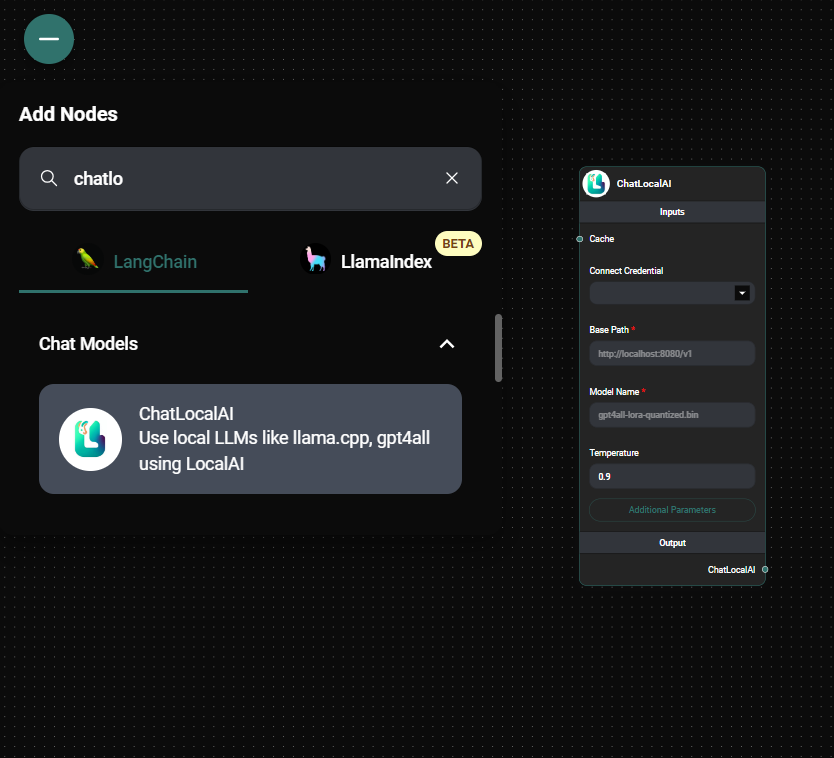

Open your AnswerAI canvas.

-

Drag and drop a new ChatLocalAI component onto the canvas.

ChatLocalAI Node & Drop UI

-

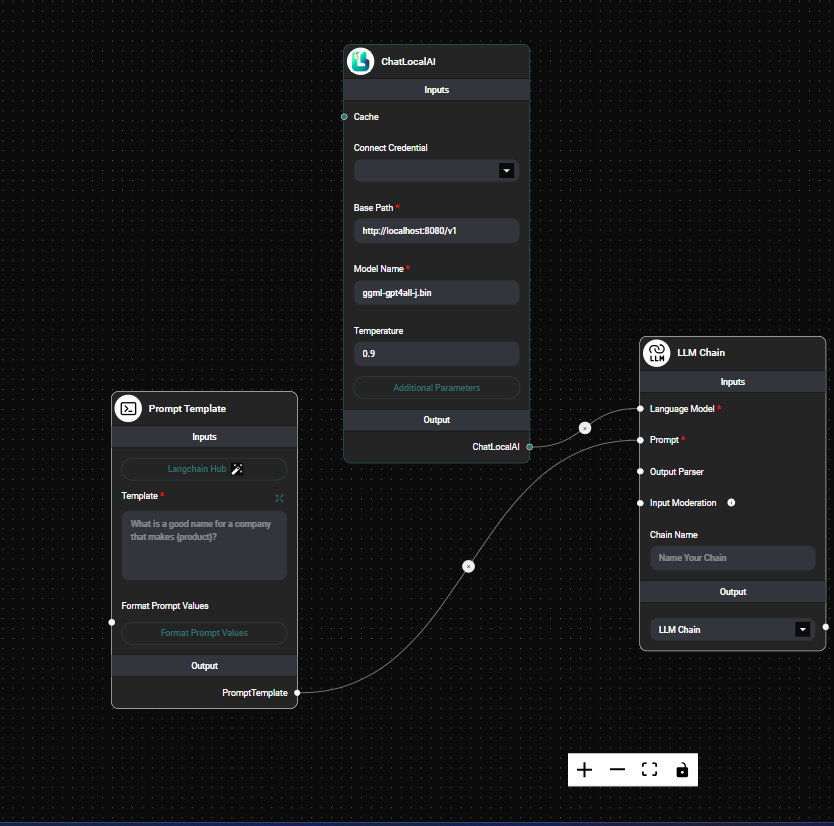

Configure the ChatLocalAI component:

- Set the Base Path to

http://localhost:8080/v1 - Set the Model Name to the filename of your model (e.g.,

ggml-gpt4all-j.bin)

ChatLocalAI Node Configuration & Drop UI

- Set the Base Path to
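To confirm that a Base Path and Model Name pair works before wiring it into a flow, you can send an OpenAI-style chat request by hand. This is a sketch under assumptions: it targets the OpenAI-compatible `/chat/completions` route, the helper names `build_chat_request` and `ask` are hypothetical, and it does not describe how the ChatLocalAI component is implemented internally.

```python
import json
from urllib.request import Request, urlopen


def build_chat_request(base_path: str, model_name: str, prompt: str) -> Request:
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    body = {
        "model": model_name,  # the same value you enter as Model Name
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        url=f"{base_path.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_path: str, model_name: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(base_path, model_name, prompt)
    with urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    # Assumption: the reply follows the OpenAI chat completion shape.
    return reply["choices"][0]["message"]["content"]
```

If `ask(...)` returns text when given the values you plan to enter in the component, the same Base Path and Model Name should be usable in AnswerAI.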

Tips and Best Practices

- Experiment with different models to find the best balance between performance and resource usage for your specific use case.

- If you're running both AnswerAI and LocalAI on Docker, you may need to adjust the base path:

  - For Windows/macOS: Use http://host.docker.internal:8080/v1
  - For Linux: Use http://172.17.0.1:8080/v1

- Regularly update your LocalAI installation to benefit from the latest improvements and model compatibility.

- If you prefer a user-friendly interface for managing local models, consider using LM Studio in conjunction with LocalAI.
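The Docker base path tip above amounts to a small lookup, sketched here in Python. The function name `local_ai_base_path` and its parameters are hypothetical, and the Linux branch assumes Docker's default bridge network, whose gateway is 172.17.0.1. The host operating system is passed in explicitly because code running inside a container cannot reliably detect it.

```python
def local_ai_base_path(both_in_docker: bool, host_os: str, port: int = 8080) -> str:
    """Pick the Base Path for ChatLocalAI from the deployment layout.

    host_os is the operating system of the machine running Docker:
    "windows", "macos", or "linux" (case-insensitive).
    """
    os_name = host_os.strip().lower()
    if os_name not in ("windows", "macos", "linux"):
        raise ValueError(f"unsupported host OS: {host_os!r}")

    if not both_in_docker:
        host = "localhost"
    elif os_name in ("windows", "macos"):
        host = "host.docker.internal"
    else:
        # Assumption: default Docker bridge network on Linux.
        host = "172.17.0.1"
    return f"http://{host}:{port}/v1"
```

If your Linux setup uses a custom Docker network, the gateway address will differ and this value should be replaced with your own.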

Troubleshooting

- Issue: Cannot connect to LocalAI API
  Solution: Ensure that the LocalAI service is running and that the base path is correct. Check your firewall settings if necessary.

- Issue: Model not found
  Solution: Verify that the model file is present in the models/ directory of your LocalAI installation and that the model name in AnswerAI matches the filename exactly.

- Issue: Poor performance or high resource usage
  Solution: Try using a smaller or more efficient model, or upgrade your hardware if possible.
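The first two issues can be told apart with a quick script. This is a rough Python sketch, assuming the models endpoint returns the OpenAI-style list shape; `diagnose` and `check` are illustrative names, not part of LocalAI or AnswerAI.

```python
import json
from typing import Optional
from urllib.request import urlopen


def diagnose(model_name: str, available_ids: Optional[list[str]]) -> str:
    """Map a model list (or None if the API was unreachable) to a short verdict."""
    if available_ids is None:
        return "cannot connect: check that LocalAI is running and the base path is correct"
    if model_name not in available_ids:
        return f"model not found: {model_name!r} is not among {available_ids}"
    return "ok"


def check(base_path: str, model_name: str, timeout: float = 5.0) -> str:
    """Fetch <base_path>/models and diagnose the result."""
    try:
        with urlopen(f"{base_path.rstrip('/')}/models", timeout=timeout) as response:
            payload = json.load(response)
    except OSError:
        # URLError, refused connections, and timeouts are all OSError subclasses.
        return diagnose(model_name, None)
    # Assumption: OpenAI-style body with one {"id": ...} entry per model.
    ids = [entry["id"] for entry in (payload.get("data") or []) if "id" in entry]
    return diagnose(model_name, ids)
```

The comparison is an exact string match, so a stray file extension in the Model Name is enough to produce the "model not found" verdict.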

For more detailed information and advanced usage, refer to the LocalAI documentation.